Scientific software metrics with Depsy – a great new tool!

As a fellow of the Software Sustainability Institute, I have talked a lot about how important software is in science, and how we need to make sure we recognise its contribution. Writing good scientific software takes a lot of work, and can have a lot of impact on the community, but it tends not to be recognised anywhere near as much as writing papers. This needs to change!

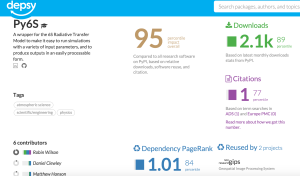

Depsy, a new project run by ImpactStory, is an awesome new tool which allows you to investigate impact metrics for scientific software that has been published to the Python and R package indexes (PyPI and CRAN respectively). For example, if you go to the page for Py6S, you see something like this (click to enlarge):

This gives various statistics about my Py6S library, such as number of downloads, number of citations, and the PageRank value in the dependency network. However, far more importantly, it gives the percentiles for each of these too. This is particularly useful for non-computing readers who may have absolutely no idea how many downloads to expect for a scientific Python library (what counts as "good"? 20 downloads? 20,000 downloads?). Here, they can see that Py6S is at the 89th percentile for downloads, 77th for citations and 84th for dependencies, and is at the 95th percentile for overall ‘impact’.
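To make the percentile idea concrete, here is a minimal Python sketch of how a raw number can be turned into a percentile. This is not Depsy's actual calculation (their about page documents that): the function name `percentile_rank` and the download counts are made up purely for illustration, and I'm assuming the simplest definition, the percentage of packages with a strictly lower value.

```python
from bisect import bisect_left


def percentile_rank(value, population):
    """Return the percentage of `population` strictly below `value`.

    Hypothetical helper for illustration; not taken from Depsy's code.
    """
    ordered = sorted(population)
    # bisect_left gives the count of items strictly less than `value`.
    below = bisect_left(ordered, value)
    return 100.0 * below / len(ordered)


# Made-up download counts for ten imaginary packages.
downloads = [12, 40, 95, 150, 300, 800, 1200, 5000, 20000, 90000]

# Seven of the ten packages have fewer than 5000 downloads.
print(percentile_rank(5000, downloads))  # 70.0
```

The point is that "5,000 downloads" means very little on its own, whereas "more than 70% of comparable packages" is something anyone can interpret.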

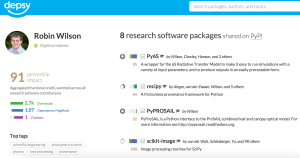

As well as making me feel nice and warm inside, this gives me some actual numbers to show that the time I spent on Py6S hasn’t been wasted, and it has actually had an impact. You can take this further and click on my name on the bottom left, and get to my metrics page, which shows that I am at the 91st percentile for impact…again something that makes me feel good, but can also be used in job applications, etc.

I won’t tell you how long I spent browsing around various projects and people that I know, but it was far too long, when I really should have been doing something else (fixing bugs in an algorithm, I think) instead.

Anyway, I thought I’d finish this post by summarising a few of the brilliant things about Depsy, and a few of the areas that could be improved. I’ve discussed all of the latter issues with the authors, and they are very keen to continue working on Depsy and improve it – and are currently actively seeking funding to do this. So, if you happen to have lots of money, please throw it their way!

So, great things:

- The percentiles. I’ve talked about this above, but they’re really great. (I think percentiles should be used a lot more in life generally, but that’s another blog post…)

- Their incredibly detailed about page, which links to huge amounts of information on how every statistic is calculated

- The open-source code that powers it, which allows you to check their algorithms and hack around with it (yes, I did spend a little while checking to make sure that they weren’t vulnerable to Little Bobby Tables attacks)

- The responsiveness of the development team: Jason and Heather have responded to my emails quickly, and taken on board lots of my comments already.

A few things that will be improved in the future, and a few more general concerns:

- Currently they try to guess whether a library is research software or not, but they seem to get a fair few things wrong. I believe they’re actively working on this.

- Citation searching is a bit dodgy at the moment. For example, it shows one citation for Py6S, but actually there is a paper on Py6S which has seven citations on Google Scholar. My idea for CITATION files could help them to link up packages and papers – so that’s another reason to add a CITATION file to your project!

- Not all scientific software is in Python and R, or hosted on GitHub, so they are hoping to expand their sources in the future.

- More generally, the issue with all metrics is that they never quite manage to capture what you’re interested in. Unfortunately, metrics are a fact of life in academia – but we need to be careful that we don’t start blindly applying ‘software impact metrics’ in unsuitable situations. Still, even if these metrics do start being used stupidly, they’re definitely better than the Impact Factor (after all, we know how they’re calculated for a start!)

So, enough waffling from me: go and check out Depsy, post an issue on GitHub if you find any problems – and tell your friends!

This post originally appeared on Robin's Blog.
